User guide

Intro

In this user guide, you’ll find advice on how to get the most out of Stable Audio, generative AI techniques, and information about our AI models and training data.

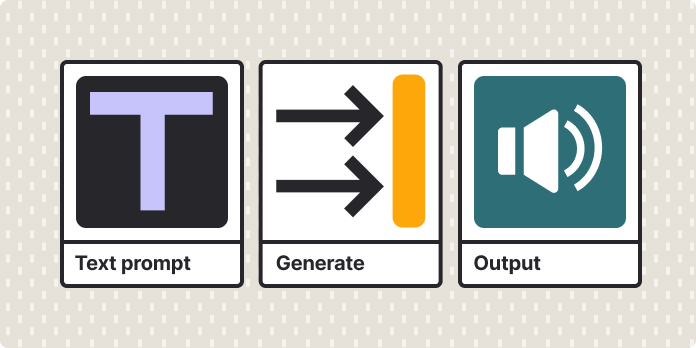

Text-to-audio

A text prompt is the text you write to describe how you want your audio to sound.

Learn how to get the best audio output using text prompts.

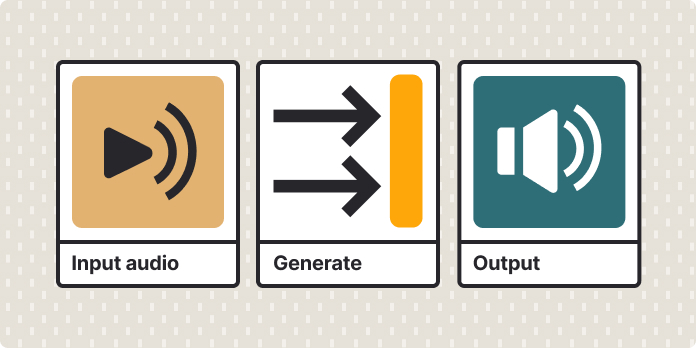

Audio-to-audio

Add audio to the AI generation process to guide the output toward the music or sound effects you want.

Training data

Our first models are trained exclusively on music provided by our partner AudioSparx.